As artificial intelligence moves from research labs to real-world applications, businesses across industries are racing to adopt machine learning techniques. But developing a machine learning model in a controlled environment differs greatly from deploying it in the real world. While teams may train accurate models, turning them into reliable, production-ready systems requires a coordinated approach.

MLOps is the bridge between data science and production environments. It combines DevOps principles with ML-specific practices such as model versioning, monitoring, retraining, and automated deployment. The goal is to reduce the friction between experimentation and production, helping teams release reliable, reproducible ML models faster. In this article, we explore ten MLOps platforms that can help you simplify deployments, ensure governance, and accelerate your path from prototype to production.

💡 Lepton AI, Nomic AI, and Moonvalley use DigitalOcean GPU Droplets to improve AI inference and training, refine code completion, derive insights from extensive unstructured datasets, and create high-definition cinematic media, delivering scalable and accessible AI-driven solutions.

What is an MLOps platform?

An MLOps platform is a set of tools and services designed to automate and manage the ML lifecycle, from model development to deployment and monitoring. It integrates best practices from DevOps with the unique needs of ML workflows, helping data scientists, ML engineers, and operations teams to collaborate efficiently. These platforms simplify tasks such as data versioning, model training, experiment tracking, CI/CD pipelines, model deployment, and real-time monitoring.

MLOps tools vs MLOps platforms

While both MLOps tools and platforms aim to simplify the machine learning lifecycle, they differ in scope and integration. MLOps tools are single-purpose solutions that address specific stages, such as model training, monitoring, or data versioning. MLOps platforms offer an end-to-end, unified environment that connects all these components in a scalable and automated workflow.

| Feature | MLOps Tools | MLOps Platforms |
|---|---|---|
| Scope | Focused on individual tasks (e.g., training, deployment, tracking) | Covers the entire ML lifecycle end-to-end |
| Integration | May require manual integration with other tools | Comes with built-in integration across components |
| Scalability | Limited to the tool’s function | Designed for scalable, production-grade deployments |
| Examples | TensorFlow, Hugging Face Transformers, XGBoost | Vertex AI, AWS SageMaker, Azure ML, Domino Data Lab |

How to choose your MLOps platform?

With so many MLOps platforms available, finding the right one to help build your AI product might feel overwhelming. The best choice depends on your team’s workflows, skill sets, infrastructure, and deployment goals.

1. Integration with your existing stack

Look for a platform that integrates smoothly with your current tools, whether it’s your data pipeline, model training environment, or deployment setup. A well-integrated platform reduces friction and helps you adopt MLOps without overhauling your tech stack.

2. Deployment type

Consider where your data lives and where you plan to execute your models. If you work in a highly regulated industry, you might prefer on-premises or hybrid deployment. But if scalability and flexibility are your top priorities, cloud-native platforms might be a better choice.

3. Automation and CI/CD capabilities

Check how well the platform supports automation for training, testing, and deploying models. A good MLOps platform should let you set up CI/CD pipelines for ML models, supporting faster iteration and reduced manual effort.
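To make the idea of an automated gate concrete, here is a minimal, platform-agnostic sketch of the kind of check a CI/CD pipeline might run before deploying a retrained model. The `EvalResult` schema, the `should_promote` function, and the thresholds are all hypothetical. Each platform expresses such gates through its own pipeline definitions.

```python
# Hypothetical sketch of a CI/CD promotion gate; names and thresholds are illustrative.
from dataclasses import dataclass


@dataclass
class EvalResult:
    """Offline evaluation metrics for one model version (hypothetical schema)."""
    accuracy: float
    latency_ms: float


def should_promote(candidate: EvalResult, production: EvalResult,
                   min_gain: float = 0.01, max_latency_ms: float = 100.0) -> bool:
    """Promote only if the candidate is measurably better than the current
    production model and stays within the latency budget."""
    beats_production = candidate.accuracy >= production.accuracy + min_gain
    within_budget = candidate.latency_ms <= max_latency_ms
    return beats_production and within_budget


if __name__ == "__main__":
    production = EvalResult(accuracy=0.91, latency_ms=40.0)
    candidate = EvalResult(accuracy=0.93, latency_ms=55.0)
    if should_promote(candidate, production):
        print("gate passed: deploy candidate")
    else:
        # A non-zero exit code fails the CI stage and blocks deployment.
        raise SystemExit("gate failed: keep current production model")
```

Requiring a minimum gain, rather than any improvement, keeps noisy retraining runs from churning the production model.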

4. Experiment tracking and reproducibility

You need to track experiments, hyperparameters, datasets, and results over time. Choose a platform that makes it easy to version your models and datasets so you can reproduce results, compare runs, and debug issues effectively.
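The sketch below shows, in plain Python, the minimum a tracked run needs for reproducibility: the hyperparameters, a fingerprint of the exact dataset, and the resulting metrics. `RunRecord`, `fingerprint`, and `best_run` are hypothetical names used for illustration, not any platform’s API. Tracking tools store the same kind of information in their own backends.

```python
# Hypothetical, library-free sketch of what an experiment tracker records per run.
import hashlib
import json
from dataclasses import dataclass, field


def fingerprint(data: bytes) -> str:
    """Short content hash that ties a run to the exact bytes it trained on."""
    return hashlib.sha256(data).hexdigest()[:12]


@dataclass
class RunRecord:
    run_id: str
    params: dict
    dataset_hash: str
    metrics: dict = field(default_factory=dict)

    def to_json(self) -> str:
        # sort_keys keeps the serialized record stable for diffing and storage
        return json.dumps(self.__dict__, sort_keys=True)


def best_run(runs: list[RunRecord], metric: str) -> RunRecord:
    """Return the run with the highest value for `metric`."""
    return max(runs, key=lambda r: r.metrics[metric])


if __name__ == "__main__":
    data = b"feature_a,feature_b,label\n1,2,0\n3,4,1\n"
    h = fingerprint(data)
    runs = [
        RunRecord("run-1", {"lr": 0.1, "depth": 4}, h, {"val_auc": 0.81}),
        RunRecord("run-2", {"lr": 0.05, "depth": 6}, h, {"val_auc": 0.84}),
    ]
    winner = best_run(runs, "val_auc")
    print(winner.run_id, winner.to_json())
```

Hashing the data instead of recording only a file path means two runs count as comparable only when they actually used identical data.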

5. Pricing model

Some platforms charge per user, per model, or based on compute usage. Make sure the pricing aligns with your usage patterns and that costs stay manageable as your AI workloads grow. Also consider whether the platform is open source or proprietary, since this affects your team’s flexibility, cost, and control over the ML lifecycle. Open-source platforms offer cost flexibility and community support, while proprietary platforms typically provide more managed features but may require long-term licensing commitments.

MLOps platforms to streamline your AI deployment

From open-source frameworks to enterprise-grade cloud solutions, we’ve compiled a selection of MLOps platforms to help you find the right fit for your AI deployment needs. Whether you’re looking for the flexibility of open-source tools or the comprehensive managed services offered by platforms like Vertex AI and Amazon SageMaker, these options span the spectrum from startup-friendly to enterprise-scale implementations.

Proprietary MLOps platforms

Proprietary MLOps platforms are commercially developed and maintained solutions that offer fully managed services, enterprise-grade support, and integration with cloud infrastructure. They are designed for ease of use, faster deployment, and scalability, making them ideal for teams that prefer ready-to-use environments and vendor support.

1. Amazon SageMaker

Amazon SageMaker is a cloud-based machine learning platform that brings together AWS’s established ML, analytics, and data services into a single, unified environment. With the evolution of SageMaker into a more integrated experience, users can access and work with data from multiple sources, like data lakes, warehouses, and federated systems, without needing complex ETL pipelines.

Key features:

- Centralized development environment (SageMaker Unified Studio) that supports shared notebooks, integrated tools, and collaborative workflows across teams.
- Lakehouse architecture that allows you to unify and query data from Amazon S3, Redshift, and third-party sources using a single copy of data with zero-ETL integrations.
- Built-in support for developing custom generative AI applications using foundation models, accelerated by services like Amazon Bedrock and Amazon Q Developer.
- Enterprise-ready governance features, including fine-grained access controls, model guardrails, data sensitivity detection, and complete lineage tracking across the AI lifecycle.

SageMaker Unified Studio itself has no separate cost, but services accessed through it (like training models or running notebooks) are billed individually. SageMaker includes a Free Tier that offers limited free usage each month for features like API requests, metadata storage, and basic compute units. Components such as Amazon Bedrock and Amazon Q Developer follow their own pricing models.

💡Looking for AWS alternatives? With DigitalOcean products, you can leave the burden of complex cloud infrastructure setup behind. Our products are designed to handle the underlying infrastructure, so you can focus on what truly matters: building and deploying your applications.

→ Take an immersive DigitalOcean product tour to experience the simplicity.

2. Google Vertex AI

Google Vertex AI is a unified, fully managed machine learning platform that simplifies the development and deployment of both traditional ML models and generative AI applications. It integrates tools for model training, tuning, evaluation, and deployment within a single interface, and supports the use of Gemini, Google’s latest family of multimodal foundation models. Vertex AI Studio and Agent Builder provide environments for prompt engineering, fine-tuning, and rapid prototyping.

Key features:

- Uses ‘Model Garden’ to discover, customize, and deploy over 200 foundation models, including Google, open-source, and third-party options.
- Trains and serves models using Vertex AI training and prediction, which supports custom code, multiple ML frameworks, and optimized infrastructure.
- Automates and manages the ML lifecycle with built-in MLOps tools such as pipelines, model registry, feature store, and model monitoring.

Vertex AI follows a pay-as-you-go pricing model, charging based on the resources and tools used, like training time, prediction requests, compute, storage, and generative AI token usage. Pricing varies by model type (e.g., Gemini, Imagen, Veo), usage mode (batch vs. real-time), and selected machine configurations. Additional features like MLOps tools, Agent Builder, and Vertex AI Pipelines have their own usage-based pricing tiers.

3. Databricks

Databricks is a unified analytics and AI platform designed to manage the full lifecycle of data engineering, machine learning, and business intelligence. Built on the concept of a data lakehouse, Databricks combines the scalability of data lakes with the performance of data warehouses, helping organizations to centralize analytics and AI workloads in a single platform.

Key features:

- Offers a data lakehouse architecture that supports both structured and unstructured data, enabling machine learning and analytics to coexist within a single environment.
- Provides built-in tools for tracking experiments, deploying ML models, and monitoring performance at scale, supporting an end-to-end AI lifecycle.
- Supports real-time analytics and ETL workflows for batch and streaming data, covering use cases from predictive modeling to operational reporting.

Databricks follows a pay-as-you-go pricing model, where you’re billed per second based on compute usage measured in Databricks Units (DBUs). You can also opt for committed use contracts to receive discounts across multiple clouds. Storage and network costs depend on your cloud provider and workload configuration.
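Per-second billing is easiest to see with a little arithmetic. The sketch below estimates only the DBU portion of a bill. The DBU rate and price per DBU are entirely hypothetical (actual rates vary by cloud, workload type, and plan), and cloud-provider charges for storage, networking, and, depending on the compute type, the underlying machines are not included.

```python
# Hypothetical cost sketch: rates below are made up, not Databricks list prices.
import math


def dbu_cost(dbu_per_hour: float, seconds: int, price_per_dbu: float) -> float:
    """DBU charge when usage is billed per second, as described above."""
    return dbu_per_hour * (seconds / 3600) * price_per_dbu


def dbu_cost_hourly_rounded(dbu_per_hour: float, seconds: int, price_per_dbu: float) -> float:
    """For comparison only: the same job if usage were rounded up to whole hours."""
    return dbu_per_hour * math.ceil(seconds / 3600) * price_per_dbu


if __name__ == "__main__":
    # Hypothetical job: a cluster consuming 4 DBU/hour at $0.40 per DBU for 90 minutes.
    per_second = dbu_cost(4, 90 * 60, 0.40)
    rounded = dbu_cost_hourly_rounded(4, 90 * 60, 0.40)
    print(f"per-second: ${per_second:.2f}, hourly-rounded: ${rounded:.2f}")
```

With these made-up inputs, per-second billing charges for 1.5 hours of DBUs rather than 2, which matters most for short or bursty jobs.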

4. TrueFoundry

TrueFoundry is a modular, cloud-agnostic platform designed for the development, deployment, and scaling of machine learning and generative AI systems on Kubernetes. It provides an end-to-end MLOps and LLMOps solution for teams to build performant AI workflows while maintaining full control over infrastructure, security, and compliance.

Key features:

- Supports high-performance model serving and training, including dynamic batching, model caching, and integration with servers like vLLM and Triton for low-latency inference.
- Offers strong observability and access control, with built-in logging, request tracking, rate limiting, and role-based permissions at user, team, and model levels.
- Provides a unified AI Gateway for managing and routing requests to over 250 LLMs, with centralized key management and support for embeddings, reranking, and prompt management.
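Rate limiting of the kind listed above is commonly implemented with a token bucket. The following is a generic sketch of that technique, not TrueFoundry’s implementation. The `TokenBucket` class and its parameters are hypothetical, and the clock is injectable so the behavior can be checked deterministically.

```python
# Generic token-bucket rate limiter (hypothetical sketch, not a gateway's actual code).
import time
from typing import Callable


class TokenBucket:
    """Allow bursts up to `capacity` requests, refilling at `rate` tokens per second."""

    def __init__(self, capacity: float, rate: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        self.tokens = capacity          # start full so an initial burst is allowed
        self.last = clock()

    def allow(self, cost: float = 1.0) -> bool:
        """Consume `cost` tokens if available; False means the caller is throttled."""
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


if __name__ == "__main__":
    fake_time = [0.0]
    bucket = TokenBucket(capacity=3, rate=1.0, clock=lambda: fake_time[0])
    print([bucket.allow() for _ in range(4)])  # [True, True, True, False]
    fake_time[0] = 2.0                         # two seconds later: two tokens refilled
    print([bucket.allow() for _ in range(3)])  # [True, True, False]
```

A per-user or per-team limit would keep one bucket per key, for example in a dictionary keyed by API key.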

TrueFoundry offers a 7-day free trial for users to explore the platform’s capabilities. For larger teams or enterprise deployments, pricing is customized based on specific requirements.

5. Microsoft Azure Machine Learning

Microsoft Azure Machine Learning provides a comprehensive MLOps platform that supports the full machine learning lifecycle, from model development to deployment and monitoring. Built to integrate with Azure DevOps and GitHub Actions, it helps teams automate workflows, track experiments, and manage production-grade AI systems with built-in governance and compliance features. Azure’s prompt flow capabilities also support the development of generative AI applications by orchestrating prompt engineering tasks and model pipelines.

Key features:

- Drives automated training and deployment pipelines with continuous delivery using native integration with Azure DevOps and GitHub.
- Provides real-time monitoring for model performance, including metrics like accuracy, data drift, and fairness in production environments.
- Facilitates prompt flow orchestration to design, test, and manage generative AI tasks with reusable components and managed endpoints.
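Drift monitoring compares the distribution of production inputs with the distribution seen at training time. One widely used drift score is the population stability index (PSI), which the sketch below computes over pre-binned proportions. This is a generic illustration, not necessarily the metric Azure uses. The bin values are hypothetical, and the 0.2 alert threshold is a common rule of thumb rather than a platform default.

```python
# Generic PSI drift score (hypothetical example data; threshold is a rule of thumb).
import math


def psi(expected: list[float], actual: list[float], eps: float = 1e-6) -> float:
    """Population stability index between two binned distributions.

    `expected` and `actual` are per-bin proportions that each sum to about 1.
    `eps` guards against log(0) when a bin is empty.
    """
    if len(expected) != len(actual):
        raise ValueError("distributions must have the same number of bins")
    score = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)
        score += (a - e) * math.log(a / e)
    return score


if __name__ == "__main__":
    training = [0.25, 0.25, 0.25, 0.25]    # feature distribution at training time
    production = [0.10, 0.20, 0.30, 0.40]  # same feature observed in production
    score = psi(training, production)
    print(f"PSI = {score:.3f}", "ALERT: drift" if score > 0.2 else "ok")
```

Each term is non-negative, so PSI is zero only when the two distributions match bin for bin.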

Microsoft Azure offers a flexible pricing model with both pay-as-you-go options and a free tier for new users. After the trial/free limits, users are only charged for the resources they consume, with no upfront commitment.

💡Looking for a Microsoft Azure alternative? DigitalOcean offers comprehensive cloud solutions for startups, SMBs, and developers who need a simple, cost-effective platform tailored to their needs.

Open source MLOps platforms

Open source platforms are free to use and give you full control over customization and deployment. They’re ideal if you have in-house engineering expertise and want to avoid vendor lock-in, and you benefit from a community-driven ecosystem that evolves rapidly. Unlike fully managed platforms, however, they require you to take on more responsibility for setup, operations, and upkeep. Although there are no licensing fees, you may still incur infrastructure, maintenance, and operational costs.

6. Kubeflow

Kubeflow is an open-source, Kubernetes-native machine learning platform designed to make MLOps workflows scalable, portable, and modular. It originated from Google’s internal practices for running TensorFlow on Kubernetes and has evolved into a multi-framework, multi-cloud toolkit for managing every stage of the ML lifecycle. Kubeflow is composed of loosely coupled components like Pipelines, Notebooks, and KServe, which can be used independently or as a unified platform.

Key features:

- Kubeflow Pipelines lets you define, orchestrate, and execute complex ML workflows as reusable, version-controlled pipeline components.
- Kubeflow Notebooks offers browser-based IDEs (like Jupyter and VS Code) running directly in Kubernetes pods for collaborative model development and experimentation.
- Katib supports AutoML functionality such as hyperparameter tuning, early stopping, and neural architecture search in a Kubernetes-native way.
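Conceptually, a pipeline is a directed acyclic graph of steps, where each step consumes the outputs of the steps upstream of it. The standard-library sketch below illustrates that idea with `graphlib`. It is not the Kubeflow Pipelines SDK, which defines components in Python and runs each step as a container on the cluster. The step names and the toy "model" are hypothetical.

```python
# Conceptual DAG runner (hypothetical steps); not the Kubeflow Pipelines SDK.
from graphlib import TopologicalSorter
from typing import Callable


def ingest(_: dict) -> list[int]:
    return [3, 1, 4, 1, 5, 9, 2, 6]


def preprocess(inputs: dict) -> list[float]:
    raw = inputs["ingest"]
    top = max(raw)
    return [x / top for x in raw]          # scale values into [0, 1]


def train(inputs: dict) -> float:
    data = inputs["preprocess"]
    return sum(data) / len(data)           # stand-in "model": the mean


def evaluate(inputs: dict) -> str:
    return f"model={inputs['train']:.3f}"


STEPS: dict[str, Callable[[dict], object]] = {
    "ingest": ingest, "preprocess": preprocess, "train": train, "evaluate": evaluate,
}
# Each step maps to the set of upstream steps it depends on.
DEPS = {"preprocess": {"ingest"}, "train": {"preprocess"}, "evaluate": {"train"}}


def run_pipeline(steps: dict, deps: dict) -> dict:
    """Execute steps in dependency order, passing upstream outputs downstream."""
    outputs: dict[str, object] = {}
    for name in TopologicalSorter(deps).static_order():
        upstream = {d: outputs[d] for d in deps.get(name, set())}
        outputs[name] = steps[name](upstream)
    return outputs


if __name__ == "__main__":
    print(run_pipeline(STEPS, DEPS)["evaluate"])  # model=0.431
```

Because every step sees only its declared inputs, each step can be cached, rerun, or swapped independently, which is the property pipeline orchestrators build on.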

Pricing information:

Kubeflow is an open-source platform, so there are no licensing or subscription costs associated with using it. However, deploying Kubeflow requires a Kubernetes cluster, which may incur infrastructure costs depending on your cloud provider.

💡Whether you’re a beginner or a seasoned expert, our AI/ML articles help you learn, refine your knowledge, and stay ahead in the field.

7. MLflow

MLflow is an open-source MLOps platform that helps manage the complete lifecycle of machine learning and generative AI workflows. Originally developed by Databricks, it supports experimentation, model tracking, packaging, deployment, and monitoring across a wide range of frameworks and environments. MLflow is widely adopted by organizations looking to unify traditional ML and GenAI processes within a single system that remains framework-agnostic and cloud-agnostic. It integrates with popular tools like Hugging Face, TensorFlow, OpenAI, and LangChain for building scalable and reproducible workflows.

Key features:

- Experiment tracking records and compares metrics, parameters, and artifacts from different training runs so you can understand and reproduce model performance.
- A centralized model registry manages model versions, stage transitions (e.g., from staging to production), and model lineage.
- Tracks fine-tuning, prompt engineering, and evaluation for large language models, with features like the AI Gateway and built-in observability tools.
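To make the registry idea concrete, here is a tiny in-memory registry with numbered versions, lineage back to the training run, and stage transitions. It is a hypothetical sketch of the concept, not MLflow’s API. MLflow exposes its registry through `mlflow.MlflowClient`, and recent MLflow releases favor model version aliases over fixed stages. The stage names and transition policy below are assumptions.

```python
# Hypothetical in-memory model registry; not MLflow's API.
from dataclasses import dataclass
from typing import Optional

# Allowed stage transitions (hypothetical policy).
ALLOWED = {
    "None": {"Staging"},
    "Staging": {"Production", "Archived"},
    "Production": {"Archived"},
    "Archived": set(),
}


@dataclass
class ModelVersion:
    name: str
    version: int
    source_run: str          # lineage: the training run that produced this version
    stage: str = "None"


class Registry:
    """Numbered versions per model name, each with a current stage."""

    def __init__(self) -> None:
        self._versions: dict[str, list[ModelVersion]] = {}

    def register(self, name: str, source_run: str) -> ModelVersion:
        versions = self._versions.setdefault(name, [])
        mv = ModelVersion(name, len(versions) + 1, source_run)
        versions.append(mv)
        return mv

    def transition(self, name: str, version: int, stage: str) -> None:
        mv = self._versions[name][version - 1]
        if stage not in ALLOWED[mv.stage]:
            raise ValueError(f"cannot move version {version} from {mv.stage} to {stage}")
        if stage == "Production":
            # Keep a single production version: archive whichever one held the slot.
            for other in self._versions[name]:
                if other.stage == "Production":
                    other.stage = "Archived"
        mv.stage = stage

    def production(self, name: str) -> Optional[ModelVersion]:
        return next((v for v in self._versions[name] if v.stage == "Production"), None)


if __name__ == "__main__":
    reg = Registry()
    for run in ("run-101", "run-102"):
        mv = reg.register("churn-model", run)
        reg.transition("churn-model", mv.version, "Staging")
        reg.transition("churn-model", mv.version, "Production")
    current = reg.production("churn-model")
    print(current.version, current.source_run)  # 2 run-102
```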

Pricing information:

MLflow is an open-source platform and is free to use when self-hosted. However, using MLflow through managed services like Databricks may involve usage-based pricing for compute, storage, and other integrated features, depending on the provider’s pricing model.

8. Kedro

Kedro is an open-source Python framework developed by QuantumBlack and now hosted by the LF AI & Data Foundation. It helps data science teams build maintainable, modular, and production-ready pipelines by applying software engineering best practices to machine learning projects. Kedro focuses on pipeline authoring, structuring code, managing configuration, and standardizing workflows.

Key features:

- Kedro-Viz visualizes pipelines, offering insights into data lineage and workflow structure for better collaboration and debugging.
- The Data Catalog abstracts file and data storage, allowing integration with various file systems and formats, including cloud storage such as Amazon S3, Google Cloud Storage, and Azure Blob Storage.
- Supports interoperability with platforms like MLflow, Kubeflow, Apache Airflow, and Vertex AI, so Kedro projects can plug into larger MLOps ecosystems.
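A data catalog separates what a dataset is called in pipeline code from where and how it is stored. The sketch below shows that pattern in plain Python with a hypothetical `MiniCatalog` class and two toy dataset types. It only mimics the concept: Kedro’s own catalog is typically declared in `catalog.yml` and uses Kedro’s dataset classes.

```python
# Hypothetical data-catalog pattern in plain Python; not Kedro's DataCatalog.
import csv
import io
from typing import Any, Callable

Loader = Callable[[], Any]
Saver = Callable[[Any], None]


class MiniCatalog:
    """Maps dataset names to load/save functions so pipeline code never handles paths."""

    def __init__(self) -> None:
        self._entries: dict[str, tuple[Loader, Saver]] = {}

    def add(self, name: str, loader: Loader, saver: Saver) -> None:
        self._entries[name] = (loader, saver)

    def load(self, name: str) -> Any:
        return self._entries[name][0]()

    def save(self, name: str, data: Any) -> None:
        self._entries[name][1](data)


def memory_dataset() -> tuple[Loader, Saver]:
    """Dataset kept in a Python variable, handy for intermediate results."""
    box: dict[str, Any] = {}

    def load() -> Any:
        return box["data"]

    def save(data: Any) -> None:
        box["data"] = data

    return load, save


def csv_text_dataset(text: str) -> tuple[Loader, Saver]:
    """CSV held in a string; in a real catalog this entry might point at cloud storage."""
    state = {"text": text}

    def load() -> list[dict]:
        return list(csv.DictReader(io.StringIO(state["text"])))

    def save(rows: list[dict]) -> None:
        buf = io.StringIO()
        writer = csv.DictWriter(buf, fieldnames=list(rows[0]))
        writer.writeheader()
        writer.writerows(rows)
        state["text"] = buf.getvalue()

    return load, save


def double_prices(rows: list[dict]) -> list[dict]:
    """A pipeline node: a pure function that knows nothing about storage."""
    return [{**row, "price": str(float(row["price"]) * 2)} for row in rows]


if __name__ == "__main__":
    catalog = MiniCatalog()
    catalog.add("raw_prices", *csv_text_dataset("item,price\napple,1.5\npear,2.0\n"))
    catalog.add("doubled_prices", *memory_dataset())
    catalog.save("doubled_prices", double_prices(catalog.load("raw_prices")))
    print(catalog.load("doubled_prices"))
```

Moving `raw_prices` to another storage backend would change only its catalog entry. The `double_prices` node stays untouched.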

Pricing information:

Kedro is an open-source framework and is free to use under the Apache 2.0 license. Since it’s self-hosted and locally run, there are no direct costs, though usage may incur infrastructure or orchestration-related expenses depending on your deployment setup.

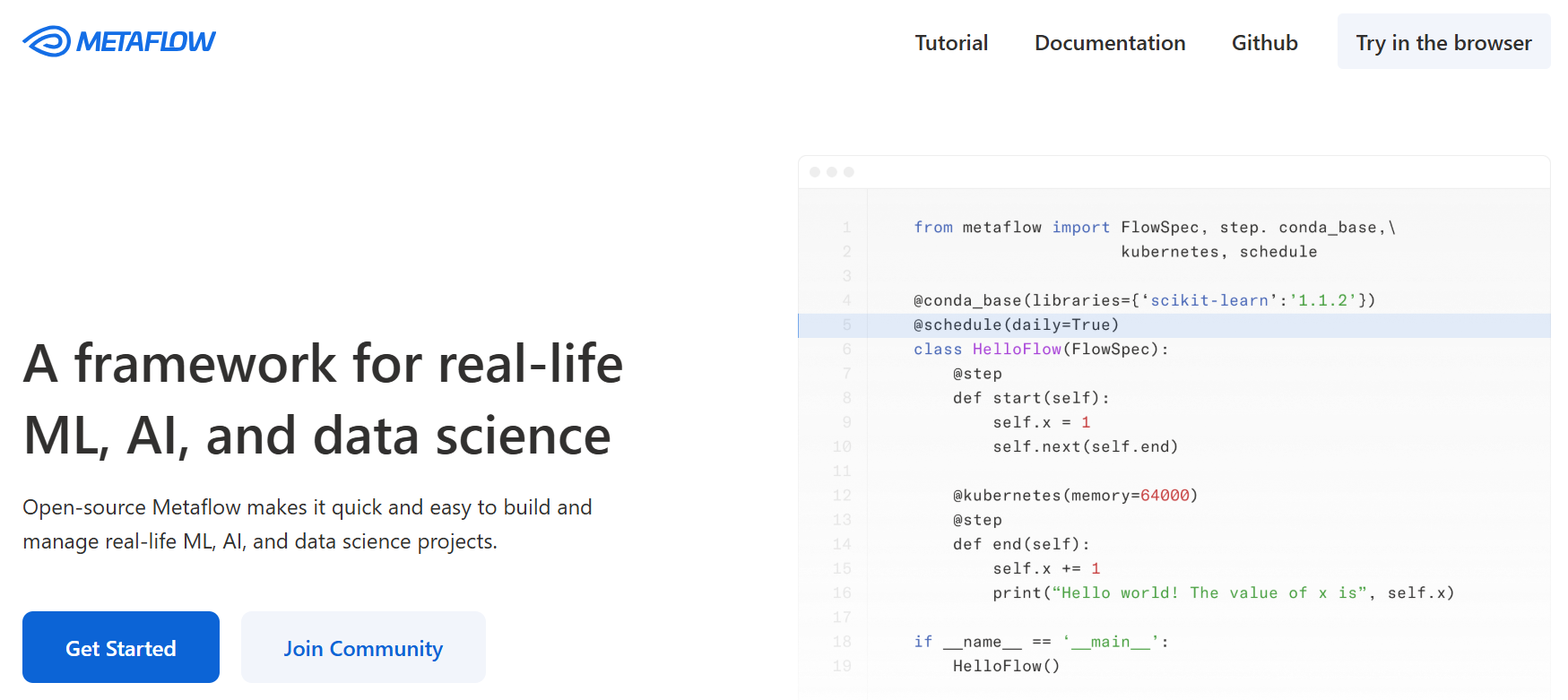

9. Metaflow

Metaflow is an open-source framework designed to simplify the development, management, and deployment of real-life machine learning, AI, and data science projects. Originally built at Netflix (later open-sourced in 2019), Metaflow helps developers and data scientists to prototype locally, scale to the cloud effortlessly, and deploy workflows to production with minimal changes. It integrates with cloud platforms like AWS, Azure, and Google Cloud, and offers flexibility for both individual and enterprise needs. Its human-centric design supports versioning, orchestration, and data handling, making it well-suited for complex, multi-stage ML pipelines.

Key features:

- With automatic versioning, Metaflow tracks variables and steps throughout the pipeline for reproducibility and easy debugging.
- Easily scales workloads to the cloud using GPUs, large memory instances, or parallel execution.
- Transitions workflows from local development to production without needing to modify code.
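Metaflow’s versioning works by persisting the values a step assigns to `self` as artifacts of that run and step. The sketch below imitates that idea by taking a deep-copied snapshot of all artifacts after every step, so any intermediate value can be inspected later. `MiniFlow` and the step functions are hypothetical, and this is not Metaflow’s `FlowSpec` API.

```python
# Hypothetical imitation of per-step artifact versioning; not Metaflow's FlowSpec API.
import copy
from typing import Callable

Step = Callable[[dict], None]


class MiniFlow:
    """Runs steps in order and stores a snapshot of all artifacts after each step."""

    def __init__(self, steps: list[Step]) -> None:
        self.steps = steps
        self.history: dict[tuple[int, str], dict] = {}
        self._next_run = 1

    def run(self) -> int:
        run_id, self._next_run = self._next_run, self._next_run + 1
        artifacts: dict = {}
        for step in self.steps:
            step(artifacts)
            # Deep copy so later mutations cannot rewrite what this step produced.
            self.history[(run_id, step.__name__)] = copy.deepcopy(artifacts)
        return run_id

    def artifact(self, run_id: int, step: str, name: str):
        """Look up an artifact as it existed right after `step` in `run_id`."""
        return self.history[(run_id, step)][name]


def start(a: dict) -> None:
    a["data"] = [5, 3, 8]


def train(a: dict) -> None:
    a["data"].sort()                      # in-place change after `start` was snapshotted
    a["model"] = sum(a["data"]) / len(a["data"])


def end(a: dict) -> None:
    a["report"] = f"mean={a['model']:.2f}"


if __name__ == "__main__":
    flow = MiniFlow([start, train, end])
    rid = flow.run()
    print(flow.artifact(rid, "start", "data"))   # [5, 3, 8]
    print(flow.artifact(rid, "train", "data"))   # [3, 5, 8]
    print(flow.artifact(rid, "end", "report"))   # mean=5.33
```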

Pricing information:

While the Metaflow framework is free to use under the Apache License 2.0, deploying it in production environments may incur costs related to infrastructure, such as cloud compute resources, storage, and orchestration services.

Experiment tracking and model management

Tracking experiments and managing models ensure reproducibility, improve collaboration, and provide visibility into model performance over time. They help teams version datasets, monitor experiments, and evaluate models before they’re deployed to production.

10. Weights & Biases

Weights & Biases is an end-to-end AI developer platform designed to support the full machine learning and generative AI lifecycle. It helps developers and research teams build, track, and monitor models and agentic AI applications with greater visibility and control. The platform is composed of modular components like Weave for building and debugging AI agents, and Models for managing experiment runs, artifacts, and model performance. Its ecosystem supports LLMOps and MLOps use cases, making it adaptable for experimentation, reproducibility, and deployment in both research and production environments.

Key features:

- Experiments and Sweeps allow users to log, visualize, and optimize machine learning experiments and hyperparameters at scale.
- Model Registry and Artifacts offer structured ways to version, share, and manage machine learning models, datasets, and pipelines.
- Guardrails and Evaluations provide mechanisms to monitor model behavior and mitigate unsafe or undesirable outcomes in production.
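A hyperparameter sweep runs many trials over a search space and keeps the best configuration. Early stopping saves compute by abandoning trials that clearly lag behind. The sketch below implements plain random search with a simple median stopping rule in standard-library Python. It is a hypothetical illustration of the technique, not the W&B Sweeps API, which is configured declaratively and offers its own early-termination options. The `train_curve` objective is a made-up stand-in for real training.

```python
# Hypothetical random search with median-based early stopping; not the W&B Sweeps API.
import random
import statistics


def train_curve(lr: float, depth: int, epochs: int):
    """Made-up stand-in for training: yields one validation score per epoch."""
    ceiling = 1.0 - abs(lr - 0.05) * 4 - abs(depth - 6) * 0.03
    for epoch in range(1, epochs + 1):
        yield ceiling * (1 - 0.5 ** epoch)


def random_search(n_trials: int, epochs: int = 5, seed: int = 0):
    """Random search with a median stopping rule; returns (best_trial, all_trials)."""
    rng = random.Random(seed)
    seen: dict[int, list[float]] = {}     # epoch -> scores earlier trials reached there
    trials = []
    for trial in range(n_trials):
        config = {"lr": rng.uniform(0.001, 0.2), "depth": rng.randint(2, 10)}
        score, stopped = float("-inf"), False
        for epoch, score in enumerate(train_curve(epochs=epochs, **config), start=1):
            history = seen.setdefault(epoch, [])
            # Stop once at least 3 earlier trials exist and this one trails their median.
            lagging = epoch >= 2 and len(history) >= 3 and score < statistics.median(history)
            history.append(score)
            if lagging:
                stopped = True
                break
        trials.append({"trial": trial, **config, "score": score, "stopped": stopped})
    completed = [t for t in trials if not t["stopped"]]
    return max(completed, key=lambda t: t["score"]), trials


if __name__ == "__main__":
    best, trials = random_search(n_trials=20)
    n_stopped = sum(t["stopped"] for t in trials)
    print(f"best: lr={best['lr']:.3f} depth={best['depth']} score={best['score']:.3f}")
    print(f"{n_stopped} of {len(trials)} trials stopped early")
```

Fixing the seed makes the whole sweep reproducible, which is the same property experiment tracking aims to guarantee for individual runs.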

Weights & Biases offers flexible pricing for both cloud-hosted and privately hosted deployments. The free tier includes core features for individual developers, while the Pro plan (starting at $50/month) adds automation, collaboration tools, and priority support. Enterprise plans are customized for organizations needing improved security, compliance, and dedicated infrastructure. Academic users can access the platform for free with unlimited tracking and up to 200GB storage.

MLOps platform FAQ

How do MLOps platforms differ from traditional ML tools?

MLOps platforms go beyond traditional ML tools by focusing on the end-to-end machine learning lifecycle, including deployment, monitoring, automation, and governance. While traditional tools assist mainly with model development, MLOps platforms ensure models are production-ready, scalable, and maintainable over time.

What features should I look for in an MLOps solution?

Look for features that support the full ML lifecycle, like experiment tracking, model versioning, automated CI/CD pipelines, and monitoring. Also consider integration with your existing tools, scalability across environments, and governance capabilities.

Are there open-source MLOps platforms?

Yes, platforms like Kubeflow, MLflow, Kedro, and Metaflow are open source and free to use. However, while there are no licensing fees, depending on your deployment setup, you may still incur infrastructure and maintenance costs.

Accelerate your AI projects with DigitalOcean GPU Droplets

Unlock the power of NVIDIA H100 GPUs for your AI and machine learning projects. DigitalOcean GPU Droplets offer on-demand access to high-performance computing resources, enabling developers, startups, and innovators to train models, process large datasets, and scale AI projects without complexity or significant upfront investments.

Key features:

- Powered by NVIDIA H100 Tensor Core GPUs
- Flexible configurations from single-GPU to 8-GPU setups
- Pre-installed Python and deep learning software packages
- High-performance local boot and scratch disks included

Sign up today and unlock the possibilities of GPU Droplets. For custom solutions, larger GPU allocations, or reserved instances, contact our sales team to learn how DigitalOcean can power your most demanding AI/ML workloads.

About the author

Sujatha R is a Technical Writer at DigitalOcean. She has over 10 years of experience creating clear and engaging technical documentation, specializing in cloud computing, artificial intelligence, and machine learning. ✍️ She combines her technical expertise with a passion for helping developers and tech enthusiasts navigate the cloud’s complexity.
