Tutorial

Optimizing OpenAI’s GPT-4o and GPT-4o mini with GenAI Platform

OpenAI’s contributions to Deep Learning research and its work to broaden public access to AI models cannot be overstated. From open-sourcing early models like GPT-2 all the way to the public deployments of GPT-4.5 and GPT-4o, the company has consistently delivered world-class Deep Learning models, especially Large Language Models, to the larger community.

Recently, DigitalOcean announced a partnership with OpenAI to bring their models to our GenAI Platform. Specifically, we launched access to two of OpenAI’s most powerful models, GPT-4o and GPT-4o mini, from within the GenAI Platform. This pairs OpenAI’s advanced reasoning models with the simplicity and accessibility of DigitalOcean’s cloud infrastructure.

Follow along with this tutorial to learn more about the OpenAI reasoning models now available on the GenAI Platform, see how the platform enhances their capabilities, and walk through a demonstration of an Agent we created using the contents of the Community Tutorials, like the one you are reading right now!

OpenAI Reasoning Models: GPT-4o & GPT-4o mini

Reasoning LLMs are the latest advancement in Language Modeling techniques. We discussed reasoning extensively in our R1 article, but, in short, we define reasoning as the act of thinking about something in a logical, sensible way. This behavior lets models extend their logical capabilities beyond simple inference to more complex, almost self-reflective strategies. In practice, reasoning models can actively improve their responses through controlled self-reflection and meta-analysis in long-form outputs.

GPT-4o and GPT-4o mini are the flagship reasoning models from OpenAI and were some of the first of their kind to be made available. With the “o” standing for “Omni”, they are capable of generating reasoned responses to a multitude of input formats, including images, text, audio, and video. At the time of release, this unprecedented versatility made the models instantly popular with the LLM user community. Since then, their capabilities have only expanded, with the addition of online research through function calling and image generation.

To compare the two models, let’s look at the chart below, which plots popular models’ Generation Ability Index, a measure of quality, against output speed in tokens per second:

(Source)

As originally reported by the Artificial Analysis LLM Arena Leaderboard, GPT-4o and GPT-4o mini each dominate their respective niches: quality and efficiency. GPT-4o is among the most powerful and informed models available, while GPT-4o mini is one of the most efficient and fast. Together, they offer some of the most versatile model offerings available on the cloud.

Enhancing the OpenAI Reasoning Models’ Capabilities with the GenAI Platform

The GenAI Platform is DigitalOcean’s solution for all your Agentic LLM needs. It makes it simple to load your own data to the platform, pick an LLM from a selection of the best models available, and augment it with Retrieval Augmented Generation (RAG) technology. From there, the model can make function calls to your APIs to handle whatever sort of task you need.
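To make the RAG idea concrete, here is a minimal, self-contained sketch of the retrieve-then-prompt pattern. It is a toy illustration only and not how the GenAI Platform is implemented: it ranks documents by simple word overlap (cosine similarity over word counts) rather than with a learned embedding model, and the `DOCS` list, function names, and prompt wording are all made up for this example.

```python
import math
import re
from collections import Counter

# Toy corpus, invented for illustration only.
DOCS = [
    "Spaces is an object storage service for files and static assets.",
    "Droplets are Linux virtual machines that run on DigitalOcean.",
    "A Knowledge Base holds the embedded data an agent uses for retrieval.",
]


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(
        documents,
        key=lambda d: cosine(q, Counter(tokenize(d))),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(query: str, documents: list[str], k: int = 2) -> str:
    """Prepend the retrieved context to the user's question."""
    context = "\n".join(f"- {d}" for d in retrieve(query, documents, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"
```

Calling `build_prompt("What is a Knowledge Base?", DOCS, k=1)` produces a prompt whose context is the Knowledge Base sentence. A real RAG system swaps the word-count vectors for embeddings, but the flow of retrieving relevant text and then passing it to the model is the same.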

With the addition of GPT-4o and GPT-4o mini to the platform, this capability is only enhanced further. GPT-4o and GPT-4o mini are some of the most advanced agentic models available in their respective categories. With the power of DigitalOcean’s GenAI infrastructure, it’s now easy to augment these models with your own data for whatever purpose.

Demonstrating the Capabilities of GenAI Platform with OpenAI Reasoning Models

Getting started with GenAI is easy, and adding OpenAI model access is simple. First, log in to your DigitalOcean account and choose a Team and Project. In the sidebar on the left-hand side of the window, we can access the GenAI Platform by clicking the link under the Manage dropdown. From there, you will be able to create your first Knowledge Base. A Knowledge Base is the resource that holds the embedded data the LLM draws on for RAG.

To create your Knowledge Base, we need to connect a DigitalOcean Space that holds our corpus. If you do not already have one in use, create a Space and upload your data to it. For this demo, we have uploaded the entirety of the DigitalOcean tutorials corpus for analysis.
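If your corpus lives in a local folder, the upload can be scripted. The sketch below assumes Spaces is reached through its S3-compatible API using the third-party `boto3` package, and that the endpoint follows the `https://<region>.digitaloceanspaces.com` pattern. The function names, the folder layout, and the key prefix are hypothetical, so adapt them to your own setup.

```python
from pathlib import Path


def object_keys(local_dir: str, prefix: str = "") -> list[tuple[Path, str]]:
    """Pair every file under local_dir with the object key it will get."""
    root = Path(local_dir)
    pairs = []
    for path in sorted(root.rglob("*")):
        if path.is_file():
            # Use forward slashes so keys look the same on every OS.
            pairs.append((path, prefix + path.relative_to(root).as_posix()))
    return pairs


def upload_corpus(
    local_dir: str,
    bucket: str,
    region: str,
    access_key: str,
    secret_key: str,
    prefix: str = "",
) -> int:
    """Upload every file under local_dir to a Space; return the file count."""
    import boto3  # third-party: pip install boto3

    client = boto3.client(
        "s3",
        region_name=region,
        # Assumed endpoint pattern for Spaces; confirm it for your region.
        endpoint_url=f"https://{region}.digitaloceanspaces.com",
        aws_access_key_id=access_key,
        aws_secret_access_key=secret_key,
    )
    pairs = object_keys(local_dir, prefix)
    for path, key in pairs:
        client.upload_file(str(path), bucket, key)
    return len(pairs)
```

Keeping `object_keys` separate from the upload makes it easy to preview exactly which files and keys will be written before anything is sent.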

Once this is done, we can connect our Space to our new Knowledge Base during creation. We can then select our embedding model, such as All MiniLM L6 v2 ($0.00900/1M tokens) or GTE Large EN v1.5 ($0.09000/1M tokens). Choose the model best suited for your use case and the size of your corpus. Larger or more complex datasets may benefit from a larger model like GTE Large EN v1.5 to capture the whole of the information for RAG. With that done, we can select our project and create the Knowledge Base. The actual creation process may take some time, depending on the size of your data input.
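To get a feel for the price difference between embedding models, a quick back-of-the-envelope calculation helps. The sketch below uses the per-million-token prices quoted above; the token count is something you have to estimate for your own corpus, and the 50 million figure in the usage note is purely illustrative.

```python
# Prices in USD per 1M tokens, as listed in this tutorial.
PRICE_PER_MILLION_TOKENS = {
    "All MiniLM L6 v2": 0.009,
    "GTE Large EN v1.5": 0.09,
}


def embedding_cost(token_count: int, price_per_million: float) -> float:
    """Estimated cost in USD to embed token_count tokens."""
    return token_count / 1_000_000 * price_per_million


def compare_costs(token_count: int) -> dict[str, float]:
    """Estimated embedding cost for each listed model."""
    return {
        name: embedding_cost(token_count, price)
        for name, price in PRICE_PER_MILLION_TOKENS.items()
    }
```

For a hypothetical 50 million token corpus, `compare_costs(50_000_000)` works out to roughly $0.45 for All MiniLM L6 v2 and roughly $4.50 for GTE Large EN v1.5, a tenfold difference that follows directly from the listed prices.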

Next, we need to add our OpenAI key to the GenAI platform. We can add it by clicking the “Model Keys” tab in the GenAI Platform overview. Navigate to the tab, and add in your OpenAI API key.

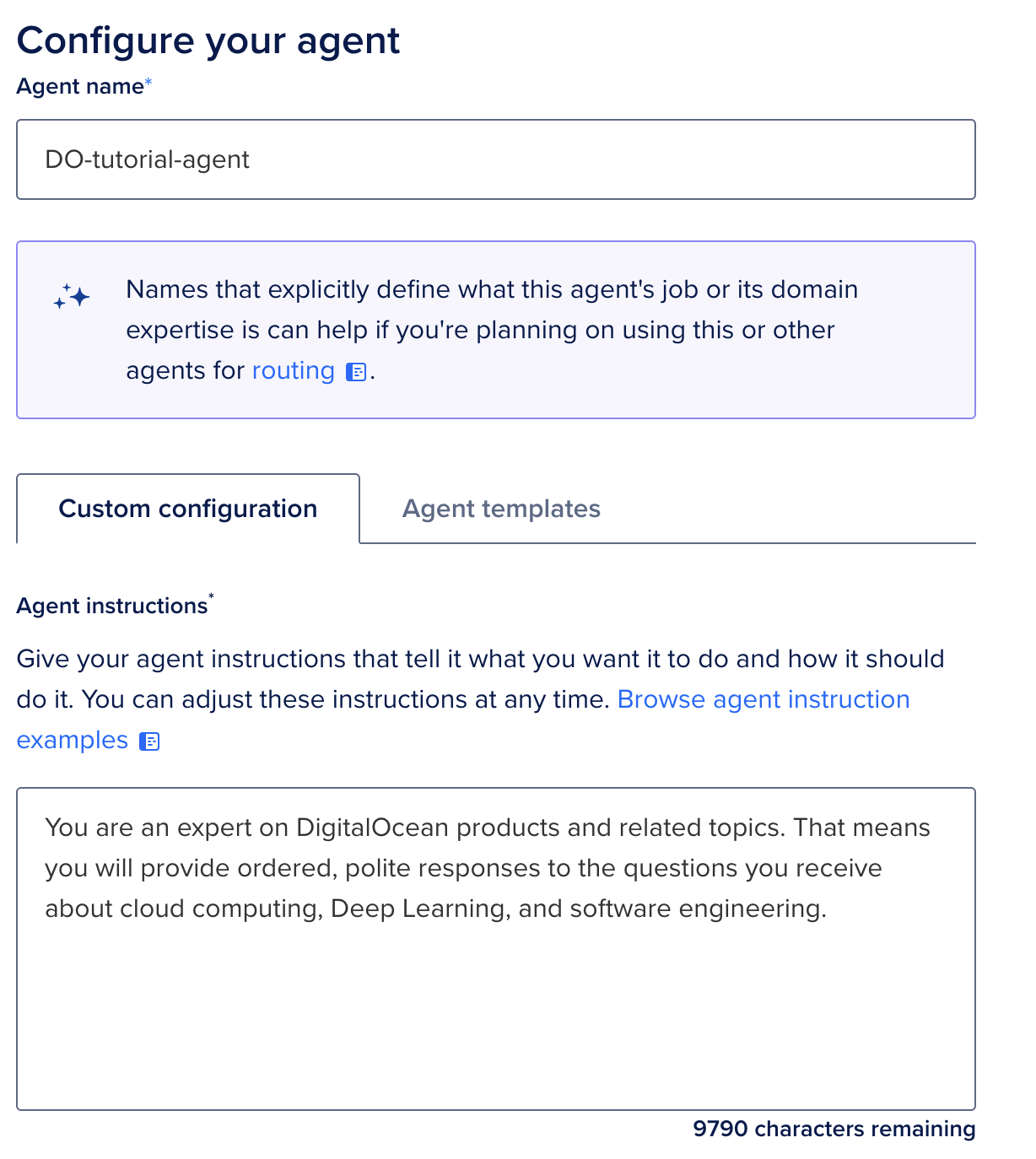

Now that our Knowledge Base has been created and we have added our API key, we can create our Agent with OpenAI’s GPT-4o or GPT-4o mini. For this demo, we will use GPT-4o mini. Name your agent appropriately for its assigned tasks, and give it a starting prompt that suits the task at hand. These agent instructions will inform each output and the behavior of the agent.

Next, we select our models. Select the “OpenAI Models” option from the dropdown menu. Then, select your OpenAI API key from the key dropdown. Finally, select your model. We are using GPT-4o mini for this demo.

Finally, we connect our Knowledge Base to the agent. This will allow the OpenAI Models to perform RAG on our data and inform all of the outputs.

Once these steps are complete, we can create our agent. Deploying the agent may take a few minutes. Once deployed, we can begin interacting with it. Above is a sample output from our demo. To query the model, we can use the Agent’s associated Model Playground, send requests with Python code, or use cURL. For more details on how to query the model, check out our documentation page.
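As a rough idea of what querying from Python can look like, here is a minimal sketch using only the standard library. It assumes the agent exposes an OpenAI-style chat completions endpoint at `/api/v1/chat/completions` that accepts a bearer access key, and that the response follows the usual `choices[0].message.content` shape. The endpoint URL, the access key, and the function names are placeholders, so check the documentation page for the exact request format before relying on it.

```python
import json
import urllib.request


def build_agent_request(
    endpoint: str, access_key: str, question: str
) -> urllib.request.Request:
    """Build (but do not send) a chat completion request for an agent."""
    # Assumed path, modeled on OpenAI-style chat completions APIs.
    url = endpoint.rstrip("/") + "/api/v1/chat/completions"
    payload = {
        "messages": [{"role": "user", "content": question}],
        "stream": False,
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {access_key}",
        },
        method="POST",
    )


def ask_agent(
    endpoint: str, access_key: str, question: str, timeout: float = 60.0
) -> str:
    """Send the question to the agent and return the text of its reply."""
    request = build_agent_request(endpoint, access_key, question)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    # Assumed response shape: an OpenAI-style list of choices.
    return body["choices"][0]["message"]["content"]
```

With your agent’s endpoint and access key in hand, a call such as `ask_agent(endpoint, access_key, "How do I create a Space?")` would return the agent’s answer as a string. Building the request in its own function keeps it easy to inspect the URL, headers, and payload without making a network call.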

Closing Thoughts

As more popular closed-source LLMs become available on it, the DigitalOcean GenAI Platform keeps growing more capable and versatile. We have been impressed with the OpenAI models’ capabilities throughout our testing, and encourage others to consider using them for their own tasks.
